A Brief History of Roomy's Architectures

An overview of Roomy’s architectural iterations, from past to present.

It's been one month since we released Roomy Alpha 5, where we alluded to working on yet another backend redesign for Roomy. We've been hard at work on a proof-of-concept, and so far things have been going really well.

Before getting into the new architecture, though, I think it's good to do a quick retrospective on how we landed here.

Roomy's architecture has undergone many different revisions. Most of the fundamental design tenets carried on in Roomy were first conceptualized four years prior in the Matrix-based Commune project.

My own involvement started with our efforts on the Weird app.

The basic idea behind Weird was to give you a simple way to get started with your own personal web pages on your own domain, coupled with web identity. Think of something like Linktree, but with the added ability to form connections and to help you find other people working on similar things.

It was really important to us, even for a simple app like Weird, to start with an architecture that could be:

- Local-first, so that you could edit your data even while you were offline, and

- Federated, in that there didn't have to be just "one" Weird that everybody had to use if you wanted to be connected.

These turned out to be anything but easy to accomplish, and they started us on a journey of learning and experimentation with distributed protocols.

The Willow Protocol

After a lot of looking around, the protocol that seemed like it did exactly what we needed was the Willow Protocol. But its implementation wasn't ready yet, so we made our own "fake" version of it that would get us just far enough to make Weird work. The plan was to switch to the "real" Willow as soon as it was ready.

Willow would allow us to replicate bytes, but it didn't say much about how those bytes were formatted, so that birthed the early ideas for our own Leaf Protocol, which, at the time, was really more of a data interoperability format than a networking protocol.

Anyway, we spent 6+ months developing this idea for Weird before making a pre-release in December 2024.

Keyhive

Around that time, we were running into the need for revision history on content in Weird. This led us down the CRDT rabbit hole. CRDTs (conflict-free replicated data types) are a way to track revisions to almost any kind of data, and they can optionally provide realtime collaboration features too.
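To make the CRDT idea a bit more concrete, here is a minimal sketch of one of the simplest CRDTs, a last-writer-wins (LWW) register. It is a generic textbook illustration, not how Keyhive, Jazz, or Roomy actually represent data, and the names (`LwwRegister`, `wins`, `merge`, `set`) are hypothetical. The key property is that `merge` gives the same answer no matter which order replicas exchange their states in.

```typescript
// A last-writer-wins register: each write carries a logical timestamp and the
// id of the replica that made it. (Illustrative sketch, not a real library API.)
interface LwwRegister<T> {
  value: T;
  timestamp: number; // logical clock, e.g. a Lamport counter
  replicaId: string; // tie-breaker when two writes share a timestamp
}

// Returns true if write `a` should win over write `b`.
function wins<T>(a: LwwRegister<T>, b: LwwRegister<T>): boolean {
  if (a.timestamp !== b.timestamp) return a.timestamp > b.timestamp;
  return a.replicaId > b.replicaId;
}

// Merge is commutative, associative, and idempotent, so replicas can exchange
// states in any order (even repeatedly) and still converge on the same value.
function merge<T>(a: LwwRegister<T>, b: LwwRegister<T>): LwwRegister<T> {
  return wins(a, b) ? a : b;
}

// A local edit bumps the clock past the current state.
// Assumes `reg` is this replica's current, already-merged state.
function set<T>(reg: LwwRegister<T>, value: T, replicaId: string): LwwRegister<T> {
  return { value, timestamp: reg.timestamp + 1, replicaId };
}
```

If two people edit the same value offline, both replicas end up with the same winner once they sync. An LWW register simply discards the losing edit; CRDTs used for text and revision history (sequence CRDTs, for example) keep much more information so that concurrent edits can be combined instead.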

It is possible to store CRDTs on the Willow Protocol, but it's not particularly convenient or efficient. That's when a couple of different people pointed us to Keyhive, which was similar to Willow in several important ways but was also CRDT-native.

This was very compelling, and after doing some digging, I really liked how elegant the Keyhive design was. We thought we could also adapt our Leaf Protocol data format ideas to work with Keyhive.

This coincided with some other interesting discussions around the AT Protocol, and the realization that both Willow and Keyhive were hypothetically suitable for realtime chat.

We realized that there was a big opening here for something that was sorely needed: a truly usable group chat that supported owning your data and federating with others. I took a week to build an experiment called Pigeon, a chat app working with ATProto login, and it went well.

We decided to pivot from Weird to working on what would become Roomy, with the understanding that Weird could eventually run on the same protocol that Roomy was running on. This is still the plan for Weird once Roomy gets more stable.

Unfortunately, we kind of had the same problem with Keyhive that we had with Willow: its implementation wasn't ready yet, so we were still running on a "fake" version we made ourselves.

After we released an MVP of Roomy, we started running into problems with our hand-rolled, completely temporary sync system. We realized we would soon need something better, and unfortunately we needed it before Keyhive was going to be ready.

Jazz

This is when Jazz entered the picture. Jazz was doing a lot of the things that Willow and Keyhive would have been doing for us. The big thing we needed from all of these protocols was local-first + access control. Not an easy thing.

Keyhive was theoretically more peer-to-peer oriented than Jazz, and I thought it was uniquely elegant, but Jazz was working right now, and we needed something right now.

We made the decision to port Roomy from our temporary solution to Jazz with the possibility of switching to Keyhive later, if Jazz didn't just end up sticking as a final solution.

This was a major effort, and on the first pass it looked like things would work really well. After we started working on the Discord bridge, though, we ran into some problems, which are described in more detail in our Alpha 5 post.

This triggered a lot of thinking about what we really needed in Roomy and how we might meet those needs with simpler tech that we could tailor to them exactly.

Leaf 0.3 - The Latest Architecture

Finally, we reach today, where we have a proof-of-concept of a new system we designed ourselves, essentially from scratch. After all of the reworks in the last several months, we had to pinch ourselves to make sure we weren't losing our minds by considering yet another rewrite, but we felt the weight of necessity behind what is hopefully the last big redo.

Meri and I had a lot of design discussions, and after the Alpha 5 release we both started work on the next proof-of-concept. We now have a design that is quite simple and, importantly, one we can adapt to the needs we have right now. Unlike our earlier hand-rolled, temporary sync solution, we plan to make this one ready for beta and then for production use. It's designed so that we can start simple and add more sophisticated features gradually as necessary.

Importing messages from our Discord servers into Jazz was a struggle: imports took hours, and I had to restart the script several times because of out-of-memory crashes. The new system can import one of our Discord spaces in 27 seconds.

The sync server is also extremely lightweight, peaking at around 65MiB of memory.

I'm very happy with the initial performance and I'm hopeful about maintaining that and even improving it as we add the features that we need.

Let's do a quick overview of how the new architecture works.

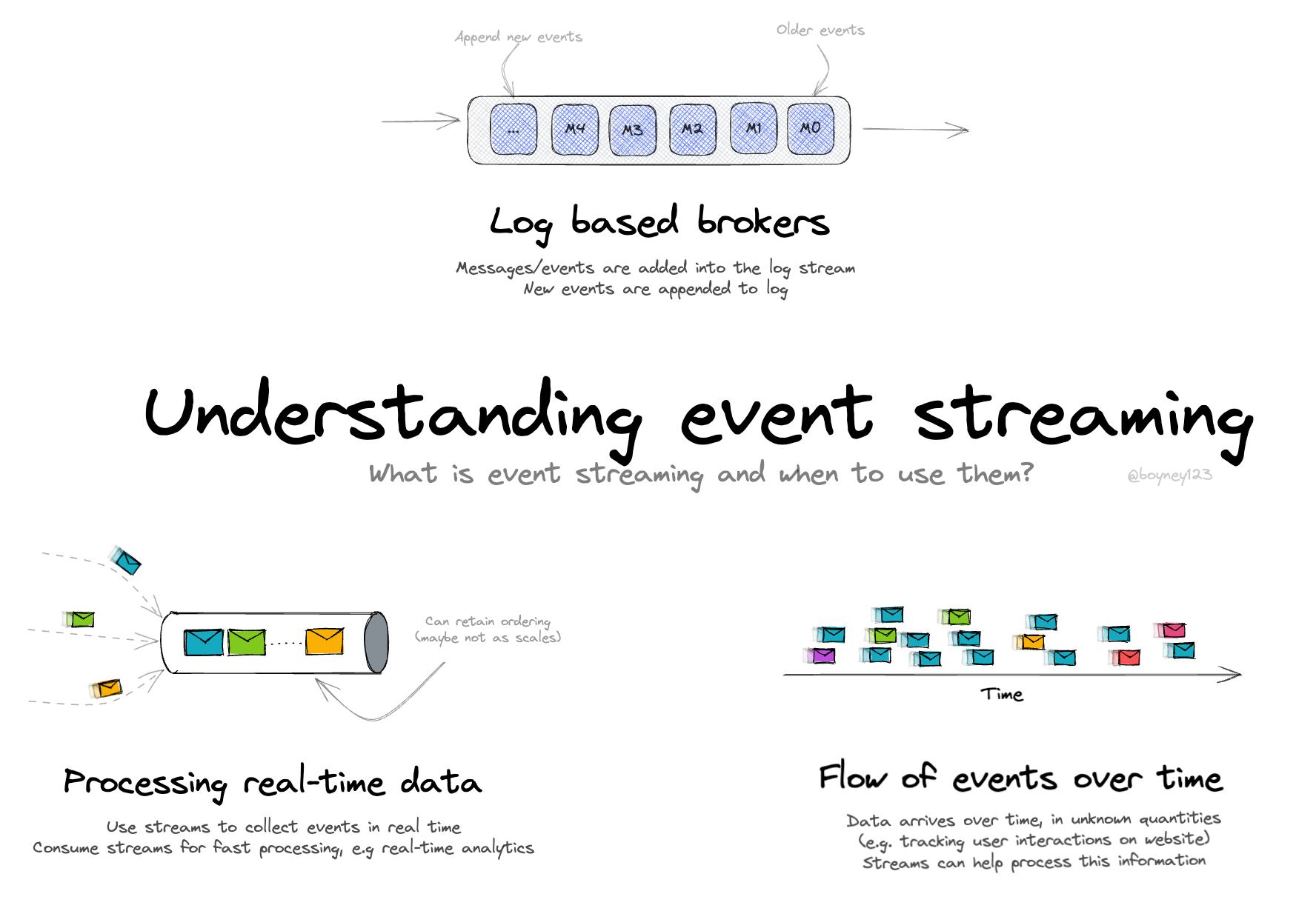

Event Streams

Each Roomy chat space is now an event stream, and every action you can take in the space is an event. Each stream is stored in its own SQLite database on our sync server.
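As a rough illustration of "a space is an event stream", here is a minimal in-memory sketch of an append-only log. The event kinds and field names (`RoomyEvent`, `StoredEvent`, `idx`, `author`) are hypothetical, not Roomy's actual schema, and a plain array stands in for the per-stream SQLite database on the sync server.

```typescript
// Hypothetical event shapes: every action in a space becomes one of these.
type RoomyEvent =
  | { kind: "createChannel"; channelId: string; name: string }
  | { kind: "sendMessage"; channelId: string; messageId: string; text: string }
  | { kind: "editMessage"; messageId: string; text: string };

interface StoredEvent {
  idx: number; // position in the stream, assigned when the event is appended
  author: string; // user who submitted the event
  event: RoomyEvent;
}

// An append-only log: events are never modified or removed, only added at
// the end with the next sequential index. (In-memory stand-in for SQLite.)
class EventStream {
  private readonly log: StoredEvent[] = [];

  append(author: string, event: RoomyEvent): StoredEvent {
    const stored: StoredEvent = { idx: this.log.length, author, event };
    this.log.push(stored);
    return stored;
  }

  // Return up to `limit` events starting at index `from`.
  query(from: number, limit: number): StoredEvent[] {
    return this.log.slice(from, from + limit);
  }

  get length(): number {
    return this.log.length;
  }
}
```

Because the log only ever grows, a client can remember the last index it has seen and later ask for "everything after that", which is what makes both history queries and realtime catch-up simple.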

Each stream also has a WASM module, along with a separate SQLite cache database. The module's job is to control which events come into and go out of the stream. Because each stream can have a different WASM module, the sync server itself stays generic and can serve different use cases with different auth strategies.

Because the WASM module can control which events a user is allowed to read, it provides a mechanism for privacy. That matters even if clients additionally choose to encrypt events to hide them from the server.
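To show the shape of that gatekeeping, here is a sketch of a per-stream policy with one check for incoming events and one for outgoing events. The real logic lives in a WASM module whose interface isn't described here, so `StreamModule`, `canWrite`, `canRead`, `makeSpacePolicy`, and the example rules (admins create channels, private channels are members-only) are all hypothetical and written as plain TypeScript for readability.

```typescript
// Minimal hypothetical event shape for this example.
interface StreamEvent {
  author: string;
  kind: string;
  channelId: string;
}

// Hypothetical stand-in for a stream's WASM module.
interface StreamModule {
  canWrite(user: string, event: StreamEvent): boolean; // gates events coming in
  canRead(user: string, event: StreamEvent): boolean; // gates events going out
}

// Example rules (assumptions, not Roomy's actual policy): users can only post as
// themselves, only admins may create channels, and private channels are
// readable and writable only by their members.
function makeSpacePolicy(
  admins: Set<string>,
  privateMembers: Map<string, Set<string>>,
): StreamModule {
  return {
    canWrite(user, event) {
      if (event.author !== user) return false; // no impersonation
      const members = privateMembers.get(event.channelId);
      if (members !== undefined && !members.has(user)) return false;
      if (event.kind === "createChannel") return admins.has(user);
      return true;
    },
    canRead(user, event) {
      const members = privateMembers.get(event.channelId);
      return members === undefined || members.has(user);
    },
  };
}

// The server would run every outgoing batch through the stream's policy.
function visibleEvents(policy: StreamModule, user: string, events: StreamEvent[]): StreamEvent[] {
  return events.filter((e) => policy.canRead(user, e));
}
```

Since the server only ever sends what `canRead` allows, this kind of filtering provides privacy from other users even when events are not encrypted; client-side encryption would additionally hide the contents from the server itself.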

Right now, all clients authenticate to the sync server with ATProto. It is very easy to integrate other auth solutions if we want to later, and it is also possible to integrate Keyhive's authorization and encryption into a stream's WASM module, which we plan to try later as a way to create encrypted, private groups.

Clients can subscribe to the event stream and query old events, which gives us what we need for realtime chat. This is very simple, but the catch is that a raw event stream is not necessarily easy to use in the UI, compared to Jazz, which has UI sync built in.
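Combining "query old events" with "subscribe to new ones" has one subtle point: the client must not miss or double-apply events at the boundary between the two. A common pattern, sketched below under assumed names (`Stream`, `follow`, and the in-memory stream itself are hypothetical, not Roomy's client API), is to subscribe first, backfill from the last known index, and use each event's index to drop duplicates and detect gaps.

```typescript
interface Ev {
  idx: number;
  payload: string;
}

// Minimal in-memory stand-in for the sync server's stream (hypothetical API).
class Stream {
  private log: Ev[] = [];
  private listeners: Array<(e: Ev) => void> = [];

  append(payload: string): void {
    const e: Ev = { idx: this.log.length, payload };
    this.log.push(e);
    this.listeners.forEach((l) => l(e));
  }

  query(from: number): Ev[] {
    return this.log.slice(from);
  }

  subscribe(listener: (e: Ev) => void): void {
    this.listeners.push(listener);
  }
}

// Hands every event from `fromIdx` onward to `apply` exactly once, in order:
// first the history, then live events as they arrive.
function follow(stream: Stream, fromIdx: number, apply: (e: Ev) => void): void {
  let next = fromIdx; // index of the next event we expect to apply

  const catchUp = (): void => {
    for (const e of stream.query(next)) {
      apply(e);
      next = e.idx + 1;
    }
  };

  // Subscribe before backfilling so no event can slip in between the two steps
  // when the real network calls are asynchronous.
  stream.subscribe((e) => {
    if (e.idx === next) {
      apply(e);
      next += 1;
    } else if (e.idx > next) {
      catchUp(); // gap detected: re-query instead of applying out of order
    }
    // e.idx < next: already applied during backfill, so the duplicate is ignored
  });

  catchUp();
}
```

With a real network connection, `query` and `subscribe` would be asynchronous, which is exactly when subscribing first and de-duplicating by index pays off.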

SQLite in the Browser

To fill the need to query chat data in the browser, we used SQLite again!

When you join a Roomy space it will download events from the stream and convert them to records in the database that can be efficiently queried from the UI.
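The conversion from events to queryable records can be pictured as a function that turns each event into a SQL statement for the local database. The sketch below assumes a hypothetical schema (`channels` and `messages` tables) and hypothetical event shapes; it only builds parameterized statements, so it says nothing about which browser SQLite build Roomy actually uses.

```typescript
// Hypothetical event shapes and table layout, for illustration only.
type SpaceEvent =
  | { kind: "createChannel"; channelId: string; name: string }
  | {
      kind: "sendMessage";
      messageId: string;
      channelId: string;
      author: string;
      text: string;
      createdAt: number;
    }
  | { kind: "editMessage"; messageId: string; text: string };

interface Statement {
  sql: string;
  params: Array<string | number>;
}

// Translate one event into the SQL that updates the local, queryable tables.
// "INSERT OR IGNORE" keeps replays idempotent if the same event is applied twice.
function materialize(event: SpaceEvent): Statement {
  switch (event.kind) {
    case "createChannel":
      return {
        sql: "INSERT OR IGNORE INTO channels (id, name) VALUES (?, ?)",
        params: [event.channelId, event.name],
      };
    case "sendMessage":
      return {
        sql: "INSERT OR IGNORE INTO messages (id, channel_id, author, body, created_at) VALUES (?, ?, ?, ?, ?)",
        params: [event.messageId, event.channelId, event.author, event.text, event.createdAt],
      };
    case "editMessage":
      return {
        sql: "UPDATE messages SET body = ? WHERE id = ?",
        params: [event.text, event.messageId],
      };
  }
}
```

Applying events in stream order means an edit always lands after the message it modifies. When downloading a whole space, running the resulting statements inside a single transaction is usually much faster than committing each one separately.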

Using SQLite, with its fully featured queries and indexes, lets us access the chat data we need far faster than we could with previous solutions. We can load over six thousand chat messages fast enough that you don't notice the delay when switching to a new channel.

We still need to optimize things so that it isn't loading the entire chat history when you click on a channel, but it is incredible that you can now scroll through a multi-year channel history without any frame skipping or delays whatsoever.
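One standard way to avoid loading a whole channel at once is keyset (or "seek") pagination backed by a composite index: load the newest page, then fetch the page just older than the oldest message on screen as the user scrolls up. This is a sketch of that general technique, not necessarily what Roomy will do; the table, column, and index names are the same hypothetical ones used for illustration above, and the row-value comparison requires SQLite 3.15.0 or later.

```typescript
interface Statement {
  sql: string;
  params: Array<string | number>;
}

// Hypothetical index so "messages in channel X, newest first" is an index range
// scan instead of a full table scan.
const CREATE_INDEX =
  "CREATE INDEX IF NOT EXISTS messages_by_channel ON messages (channel_id, created_at, id)";

// Cursor pointing at the oldest message currently loaded.
interface Cursor {
  createdAt: number;
  id: string;
}

// Fetch the `limit` messages just older than `before`, or the newest `limit`
// messages when no cursor is given. Rows come back newest-first.
function pageQuery(channelId: string, limit: number, before?: Cursor): Statement {
  const base = "SELECT id, author, body, created_at FROM messages WHERE channel_id = ?";
  const order = " ORDER BY created_at DESC, id DESC LIMIT ?";
  if (before === undefined) {
    return { sql: base + order, params: [channelId, limit] };
  }
  return {
    // Row-value comparison: strictly older than the cursor, with `id` breaking ties.
    sql: base + " AND (created_at, id) < (?, ?)" + order,
    params: [channelId, before.createdAt, before.id, limit],
  };
}
```

Unlike `OFFSET`-based paging, the cost of each page does not grow with how far back you have scrolled, because the index lets SQLite seek directly to the cursor position.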

Caveats

The major caveat right now is that joining a space requires you to download the entire space history. On a fast network connection, the new system can download around 57k messages totaling about 27MB in something like 8-15 seconds, but that's quite a while to wait to join a new space, and slower connections will be a lot worse.
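A quick back-of-the-envelope check on those figures, treating MB as $10^6$ bytes. The 10 Mbit/s connection in the last line is only an example figure, not a measurement, and it counts raw transfer time alone, before any processing on the client:

$$
\begin{aligned}
\text{average message size} &= \frac{27 \times 10^{6}\ \text{B}}{57{,}000} \approx 474\ \text{B} \\
\text{effective throughput} &= \frac{27\ \text{MB}}{15\ \text{s}} \text{ to } \frac{27\ \text{MB}}{8\ \text{s}} \approx 1.8 \text{ to } 3.4\ \text{MB/s} \\
\text{transfer time at 10 Mbit/s} &= \frac{27\ \text{MB}}{1.25\ \text{MB/s}} = 21.6\ \text{s}
\end{aligned}
$$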

Also, you don't really want to download the entire history for all chat spaces you join. You only want that for personal spaces, or maybe ones where you are an admin or that you are very involved in and want a local backup of.

Luckily, this problem is very solvable: we just have to hook up a way to index messages by channel on the sync server, and to separate core space metadata, such as the channel list, from the messages, so that you can download only the latest messages. We'll be resolving this issue soon.
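Here is a minimal sketch of that split: core metadata is always sent in full, while messages are grouped by channel and only the newest few per channel go into the initial download. The event shape, `InitialSync`, and `buildInitialSync` are hypothetical. A real sync server would maintain these per-channel indexes as events arrive instead of scanning the whole history each time; the function only shows what would be sent.

```typescript
// Hypothetical event shape: `channelId` identifies the channel of a message event.
interface SpaceEvent {
  idx: number;
  kind: "channelCreated" | "spaceRenamed" | "message";
  channelId?: string;
}

interface InitialSync {
  metadata: SpaceEvent[]; // everything needed to render the space itself
  latest: Map<string, SpaceEvent[]>; // newest `perChannel` messages per channel
}

// Split a space's events into core metadata and per-channel message lists,
// then keep only the tail of each channel for the initial download.
function buildInitialSync(events: SpaceEvent[], perChannel: number): InitialSync {
  const metadata: SpaceEvent[] = [];
  const byChannel = new Map<string, SpaceEvent[]>();

  for (const e of events) {
    if (e.kind === "message" && e.channelId !== undefined) {
      const list = byChannel.get(e.channelId) ?? [];
      list.push(e);
      byChannel.set(e.channelId, list);
    } else {
      metadata.push(e);
    }
  }

  const latest = new Map<string, SpaceEvent[]>();
  for (const [channelId, list] of byChannel) {
    // Math.max guards against slice(-0), which would return the whole list.
    latest.set(channelId, list.slice(Math.max(0, list.length - perChannel)));
  }
  return { metadata, latest };
}
```

Older messages can then be fetched per channel on demand, while spaces you want a full local backup of can still download the entire stream.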

Summary

We've made great progress on our new architecture in the last month and have made incredible performance gains on some benchmarks that are very important to our use-case.

We have a subset of the Roomy UI working with the backend, and we are currently working on restoring more of its functionality while fixing bugs and developing the new message schema.

It is extremely unstable and the links may not last, but we have an experimental demo instance of the new Roomy code along with a partially imported test space with some of our Muni Town chat history.

We are going to be working hard on getting the next release out and hope to have more news on that soon!